For over a decade, RemoveGrain has been one of the most used filters for all kinds of video processing. It is used in SMDegrain, xaa, FineDehalo, HQDeringMod, YAHR, QTGMC, Srestore, AnimeIVTC, Stab, SPresso, Temporal Degrain, MC Spuds, LSFmod, and many, many more. The extent of this enumeration may seem ridiculous or even superfluous, but I am trying to make a point here. RemoveGrain (or more recently RGTools) is everywhere.

But despite its apparent omnipresence, many encoders – especially those do not have years of encoding experience – don't actually know what most of its modes do. You could blindly assume that they're all used to, well, remove grain, but you would be wrong.

After thinking about this, I realized that I, too, did not fully understand RemoveGrain. There is barely a

script that doesn't use it, and yet here we are, a generation of new encoders, using the legacy of our

ancestors, not understanding the code that our CPUs have executed literally billions of times.

But who

could

blame us? RemoveGrain, like many Avisynth plugins, has ‘suffered’ optimization to the point of complete

obfuscation.

You can try to read the code

if you want to, but trust me, you don't.

Fortunately, in October of 2013, a brave adventurer by the name of tp7 set upon a quest which was thitherto believed impossible. They reverse engineered the open binary that RemoveGrain had become and created RGTools, a much more readable rewrite that would henceforth be used in RemoveGrain's stead.

Despite this, there is still no complete (and understandable) documentation of the different modes. Neither

the

Avisynth wiki nor tp7's own documentation nor any of the other guides manage to

accurately

describe all modes. In this article, I will try to explain all modes which I consider to be insufficiently

documented. Some self-explanatory modes will be omitted.

If you feel comfortable reading C++ code, I would recommend reading the code yourself.

It's not very long and quite easy to understand: tp7's rewrite on Github

- Clipping

- Clipping: A clipping operation takes three arguments. A value, an upper limit, and a lower limit. If the value is below the lower limit, it is set to that limit, if it exceeds the upper limit, it is the to that limit, and if it is between the two, it remains unchanged.

- Convolution

-

The weighed average of a pixel and all pixels in its neighbourhood. RemoveGrain exclusively uses 3x3

convolutions, meaning for each pixel, the 8 surrounding pixels are used to calculate the

convolution.

Mode 11, 12, 19, and 20 are just convolutions with different matrices.

Mode 5

The documentation describes this mode as follows: “Line-sensitive clipping giving the minimal change.”This is easier to explain with an example:

|

|

|

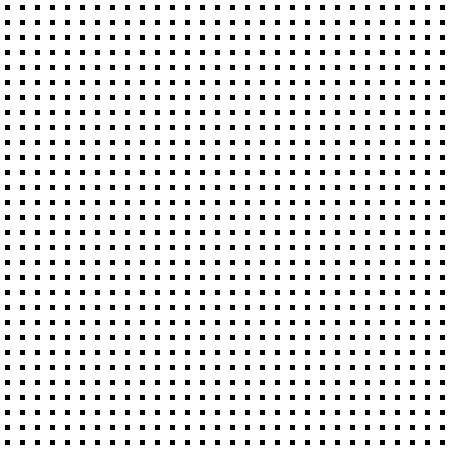

Left: unprocessed clip. Right: clip after RemoveGrain mode 5

Mode 5 tries to find a line within the 3x3 neighbourhood of each pixel by comparing the center pixel with two opposing pixels. This process is repeated for all four pairs of opposing pixels, and the center pixel is clipped to their respective values. After computing all four, the filter finds the pair which resulted in the smallest change to the center pixel and applies that pair's clipping. In our example, this would mean clipping the center pixel to the top-right and bottom-left pixel's values, since clipping it to any of the other pairs would make it white, significantly changing its value.

To visualize the aforementioned pairs, they are labeled with the same number in this image.Due to this, a line like this could not be found, and the center pixel would remain unchanged:

Mode 6

This mode is similar to mode 5 in that it clips the center pixel's value to opposing pairs of all pixels in its neighbourhood. The difference is the selection of the used pair. Unlike with mode 5, mode 6 considers the range of the clipping operation (i.e. the difference between the two pixels) as well as the change applied to the center pixel. The exact math looks like this where p1 is the first of the two opposing pixels, p2 is the second, c_orig is the original center pixel, and c_processed is the center pixel after applying the clipping.

This means that a clipping pair is favored if it only slightly changes the center pixel and there is only little difference between the two pixels. The change applied to the center pixel is prioritized (ratio 2:1) in this mode. The pair with the lowest diff is used.

Mode 7

Mode 7 is very similar to mode 6. The only difference lies in the weighting of the values.Mode 8

Again, not much of a difference here. This is essentially the opposite of mode 6.Mode 9

In this mode, only the difference between p1 and p2 is considered. The center pixel is not part of the equation.Everything else remains unchanged. This can be useful to fix interrupted lines, as long as the length of the gap never exceeds one pixel.

|

|

|

The center pixel is clipped to pair 3 which has the lowest range (zero, both pixels are black).

Should the calculated difference for 2 pairs be the same, the pairs with higher numbers (the numbers in the image, not their values) are prioritized. This applies to modes 5-9.

>>> d = d.std.PlaneStats()

>>> d.get_frame(0).props.PlaneStatsAverage

0.0

>>> d = d.std.PlaneStats()

>>> d.get_frame(0).props.PlaneStatsAverage

0.05683494908489186

This is explained by the different handling/interpolation of edge pixels, as can be seen in this comparison.

Edit: This may also be caused by a bug in the PlaneStats code which was fixed in R36. Since 0.05 is way

too high for such a small difference, the PlaneStats bug is likely the reason.

Note that the images were resized. The black dots were 1px in the original image.

|

|

|

| The source image | clip.std.Convolution(matrix=[1, 2, 1, 2, 4, 2, 1, 2, 1]) | clip.rgvs.RemoveGrain(mode=12) |

Mode 13

Since this is a field interpolator, the middle row does not yet exist, so it cannot be used for any calculations.

It uses the pair with the lowest difference and sets the center pixel to the average of that pair. In the example, pair 3 would be used, and the resulting center pixel would be a very light grey.

Mode 14

Same as mode 13, but instead of interpolating the top field, it interpolates the bottom field.Mode 15

Avisynth Wiki

“It's the same but different.” How did people use this plugin during the past decade?

Anyway, here is what it actually does:

First, a weighed average of the three pixels above and below the center pixel is calculated as shown in the

convolution above. Since this is still a field interpolator, the middle row does not yet

exist.

Then, this average is clipped to the pair with the lowest difference.

In the example, the average would be a grey with slightly above 50% brightness. There are more dark pixels

than bright ones, but

the white pixels are counted double due to their position. This average would be clipped to the pair with

the smallest range, in this case bottom-left and top-right. The resulting pixel would thus have the color of

the top-right pixel.

Mode 16

Same as mode 15 but interpolates bottom field.

Mode 21

The value of the center pixel is clipped to the smallest and the biggest average of the four surrounding pairs.Mode 22

Same as mode 21, but rounding is handled differently. This mode is faster than 21 (4 cycles per pixel).I feel like I'm missing a proper ending for this, but I can't think of anything

Back to index

Back to index